Going a Step Further with Google's Art Doppelgängers

- Freddy Dopfel

- Jan 20, 2018

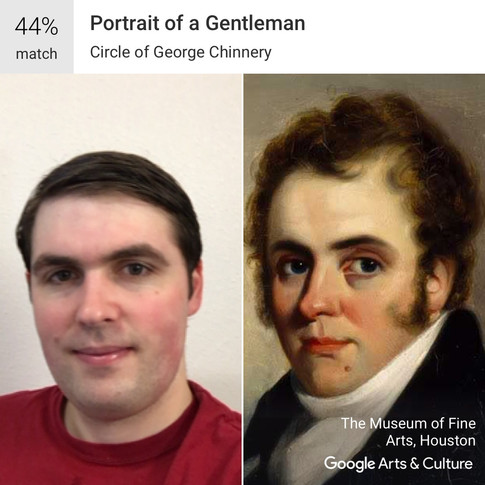

This past week, Google released an update to its Google Cultural Institute mobile app that lets users upload a selfie and automatically matches it to faces found in works of art. The app (available for iOS and Android) quickly went viral as people began sharing their selfies alongside their fine-art doppelgängers. The system likely uses the same neural network that Google designed for face matching in Google Photos, but I believe the process can be taken further.

I've previously experimented with convolutional neural networks in two ways. The first is neural style transfer, a method of capturing the artistic style of a painting and applying it to a photo (see my earlier blog post). The second is DeepDream, which repeatedly feeds an image through a convolutional network and modifies it to amplify the features the network detects. What if I used my existing neural style transfer program to apply the style of my doppelgänger's painting to my own photo? My expectations were low, especially since style transfer tends to struggle with faces and often deforms them, but I gave it a shot anyway.
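How can a program "capture" a style at all? In the approach of Gatys et al., which most neural style transfer programs build on, the style of an image is summarized by Gram matrices of a CNN's feature maps: correlations between feature channels, with spatial position summed away. Here is a minimal NumPy sketch of that idea, not my actual program. It assumes random arrays as stand-ins for the activations a pretrained network (such as VGG) would produce, and `gram_matrix` and `style_loss` are illustrative names.

```python
import numpy as np

def gram_matrix(features: np.ndarray) -> np.ndarray:
    """Channel-to-channel correlations of a (channels, height, width) feature map.

    Spatial positions are summed over, so the result records which features
    co-occur but not where they appear in the image.
    """
    c, h, w = features.shape
    flat = features.reshape(c, h * w)
    return flat @ flat.T / (h * w)

def style_loss(generated: np.ndarray, style: np.ndarray) -> float:
    """Mean squared difference between the Gram matrices of two feature maps."""
    diff = gram_matrix(generated) - gram_matrix(style)
    return float(np.mean(diff ** 2))

rng = np.random.default_rng(0)
style_feats = rng.random((8, 16, 16))  # stand-in for CNN activations of the painting

# Shuffle every spatial position: the layout is destroyed, the statistics are not.
perm = rng.permutation(16 * 16)
shuffled = style_feats.reshape(8, -1)[:, perm].reshape(8, 16, 16)
different = rng.random((8, 16, 16))    # stand-in for an unrelated image

print(style_loss(shuffled, style_feats))   # effectively 0 (up to floating-point rounding)
print(style_loss(different, style_feats))  # noticeably larger
```

Because the Gram matrix ignores where features occur, a completely scrambled copy of the painting scores as a near-perfect style match. That is one plausible reason faces suffer: the style term rewards matching texture and color statistics with no notion of where eyes or teeth belong.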

Well, my computer has been running day and night for the past few days, acting as a nice electric space heater while it backpropagates through the network to optimize these images, and I finally have some results.

In most cases, the features of the painting were very difficult to transfer. Most of what carried over was color and shading, possibly because a single painting did not provide enough data to derive a "style". Faces are a known weak spot for neural style transfer in general, and this experiment appears to be no exception. Then I had another idea: what would happen if I reversed the process? Instead of stylizing a photo of myself with the style of the artwork, what if I stylized the artwork to look more like a photo of myself?
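Reversing the process does not need a different algorithm; it only swaps which image supplies the content and which supplies the style in the objective. Here is a minimal sketch of a Gatys-style weighted objective, again with random arrays standing in for network activations. The weights `alpha` and `beta` and their defaults are hypothetical, a real program sums these terms over several network layers, and the sketch assumes (as many implementations do) that optimization starts from the content image.

```python
import numpy as np

def gram_matrix(features: np.ndarray) -> np.ndarray:
    """Channel correlations of a (channels, height, width) feature map."""
    c, h, w = features.shape
    flat = features.reshape(c, h * w)
    return flat @ flat.T / (h * w)

def total_loss(generated: np.ndarray, content: np.ndarray, style: np.ndarray,
               alpha: float = 1.0, beta: float = 1e3) -> float:
    """Weighted content term plus style term.

    content: features whose spatial layout the result should keep.
    style:   features whose channel statistics the result should imitate.
    alpha, beta: hypothetical weights; real runs tune them per image pair.
    """
    content_term = np.mean((generated - content) ** 2)
    style_term = np.mean((gram_matrix(generated) - gram_matrix(style)) ** 2)
    return float(alpha * content_term + beta * style_term)

rng = np.random.default_rng(1)
photo = rng.random((8, 16, 16))     # stand-in features of the selfie
painting = rng.random((8, 16, 16))  # stand-in features of the matched painting

# Usual direction: start from the photo, keep its layout, borrow the painting's style.
forward = total_loss(photo, content=photo, style=painting)
# Reversed direction: start from the painting, keep its layout, borrow the photo's style.
reverse = total_loss(painting, content=painting, style=photo)
print(forward, reverse)
```

At the starting point the content term is zero and both directions see the same style mismatch, since the Gram difference is symmetric. What differs is which spatial layout the optimization tries to preserve while it pulls the channel statistics toward the other image.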

The neural style algorithm isn't really designed to transfer photographic style onto artwork, so again my expectations were low, but the results were interesting nonetheless. I can't say that the paintings look any more like me, but you can definitely see that the skin tone is closer to mine, and in some cases obvious artifacts of my photo (such as the pattern of my shirt) have been carried over onto the painting.

But given that this is a project about creating artwork, I thought I would keep at it until I found something that wasn't completely terrible. By expanding my training set to include more of the artist's work, as well as paintings whose lighting more closely matched the lighting in my photo, and by tweaking other parameters, I was able to create something that looked less terrible and had fewer obvious color distortions. However, in trying to imitate brush strokes, the system mangled and discolored my teeth. Once again, this is one of the main reasons I avoid neural style transfer on photos where faces are clearly visible.
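There are several ways to use more than one painting as a style source. One simple option is to blend the Gram matrices of all the style images into a single target, optionally weighting some images more heavily (for example, paintings whose lighting is closer to the photo). Here is a sketch under the same stand-in-features assumption; `blended_style_target` and the weighting scheme are illustrative, not a description of my exact setup.

```python
import numpy as np

def gram_matrix(features: np.ndarray) -> np.ndarray:
    """Channel correlations of a (channels, height, width) feature map."""
    c, h, w = features.shape
    flat = features.reshape(c, h * w)
    return flat @ flat.T / (h * w)

def blended_style_target(style_maps, weights=None) -> np.ndarray:
    """Weighted average of the Gram matrices of several style images.

    Every map must have the same channel count, but spatial sizes may differ,
    because each Gram matrix is (channels, channels).
    """
    grams = np.stack([gram_matrix(f) for f in style_maps])
    if weights is None:
        weights = np.ones(len(grams))
    weights = np.asarray(weights, dtype=float)
    weights = weights / weights.sum()  # normalize so the target stays on the same scale
    return np.tensordot(weights, grams, axes=1)

rng = np.random.default_rng(2)
paintings = [rng.random((8, 16, 16)), rng.random((8, 10, 12)), rng.random((8, 20, 20))]
# Hypothetical weighting: count the third painting (say, closest lighting) twice as much.
target = blended_style_target(paintings, weights=[1, 1, 2])
print(target.shape)  # (8, 8)
```

The style loss is then computed against this blended target instead of a single painting's Gram matrix.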

Art is hard. Neural style transfer can make some beautiful images (such as the banner for this website), but matching a photo with a suitable style image is non-trivial. Much of the weirdness in style transfer comes from convolutional neural networks seeing images differently than human minds do. This makes generating neural style art more of an art than a science (pun intended).